Posted on: 2024-01-16 12:01


What do Threads, Mastodon, and hospital records have in common?

It’s taken a while, but social media platforms now know that people prefer their information kept away from corporate eyes and malevolent algorithms. That’s why the newest generation of social media sites like Threads, Mastodon, and Bluesky boast of being part of the "fediverse." Here, user data is hosted on independent servers rather than one corporate silo. Platforms then use common standards to share information when needed. If one server starts to host too many harmful accounts, other servers can choose to block it.

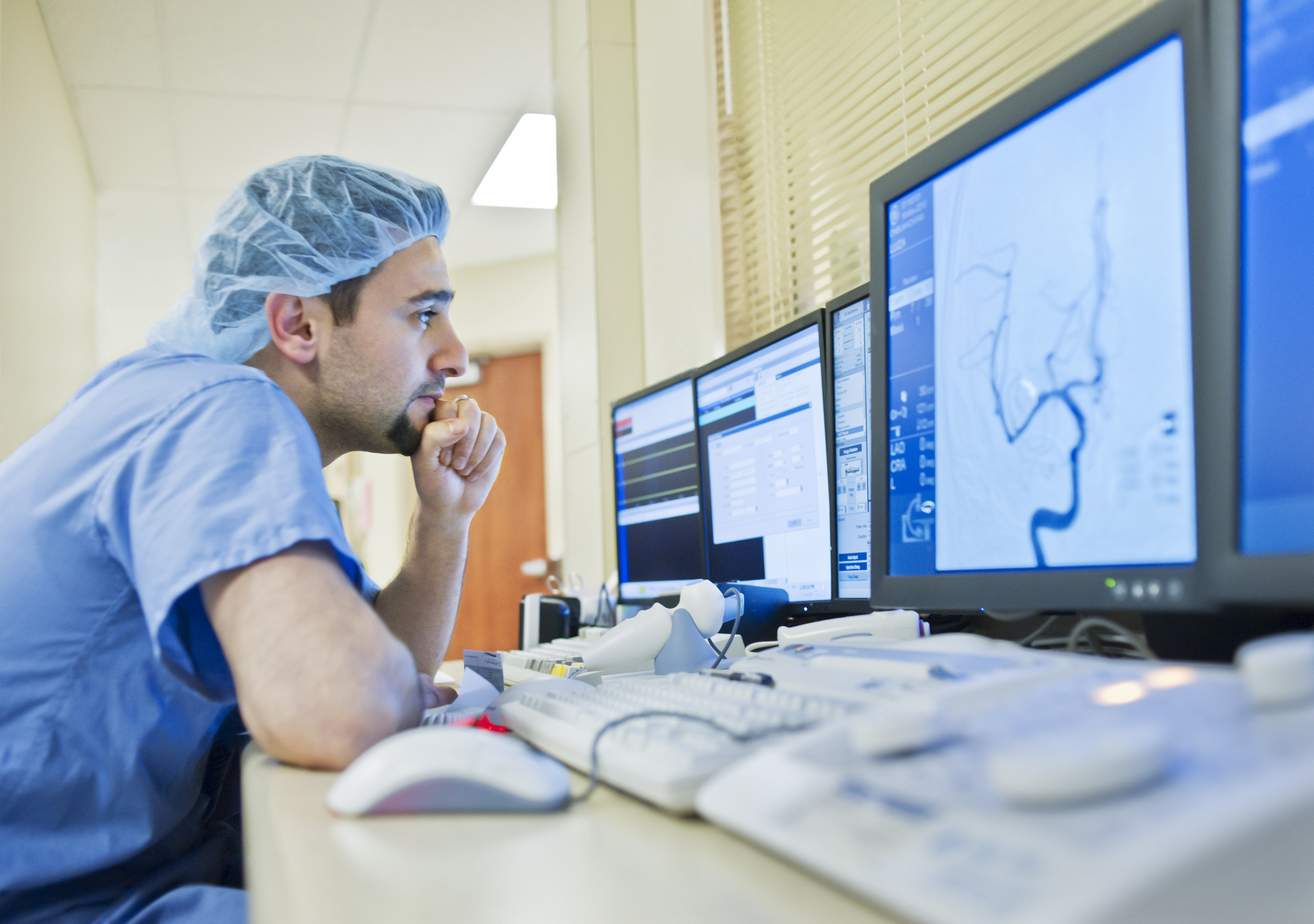

They’re not the only ones embracing this approach. Medical researchers think a similar strategy could help them train machine learning models to spot disease trends in patients. Putting their AI algorithms on special servers within hospitals for "federated learning" could keep privacy standards high while letting researchers uncover new ways to detect and treat diseases.

“The use of AI is just exploding in all facets of life,” said Ronald M. Summers of the National Institutes of Health Clinical Center in Maryland, who uses the method in his radiology research. “There's a lot of people interested in using federated learning for a variety of different data analysis applications.”

How does it work?

Until now, medical researchers refined their AI algorithms using a few carefully curated databases, usually anonymized medical information from patients taking part in clinical studies.

However, improving these models further requires a larger dataset with real-world patient information. Researchers could pool data from several hospitals into one database, but that means asking the hospitals to hand over sensitive and highly regulated information. Sending patient information outside a hospital’s firewall is a big risk, so getting permission can be a long and legally complicated process. National privacy laws and the EU’s GDPR set strict rules on sharing a patient’s personal information.

So instead, medical researchers are sending their AI model to hospitals so it can analyze a dataset while staying within the hospital’s firewall.

Typically, doctors first identify eligible patients for a study, select any clinical data they need for training, confirm its accuracy, and then organize it on a local database. The database is then placed onto a server at the hospital that is linked to the federated learning AI software. Once the software receives instructions from the researchers, it can work its AI magic, training itself with the hospital’s local data to find specific disease trends.

Every so often, the locally trained model is sent back to a central server, where it joins the models trained at other hospitals. An aggregation method then combines these trained models to update the original model. For example, Google’s popular FedAvg aggregation algorithm averages the trained models’ parameters element by element. Each average becomes part of the model update, with each hospital’s contribution weighted in proportion to the size of its training dataset.
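To make the weighted averaging concrete, here is a minimal sketch in plain Python. It is an illustration of the idea described above, not Google’s implementation: the function name `fed_avg`, the dictionary-of-lists parameter layout, and the two example hospitals are all assumptions made for the example.

```python
from typing import Dict, List

# Assumed layout for illustration: each model is a dict mapping a
# parameter name to a flat list of numbers.
Params = Dict[str, List[float]]


def fed_avg(local_params: List[Params], num_examples: List[int]) -> Params:
    """Element-wise average of parameters, weighted by local dataset size."""
    if not local_params or len(local_params) != len(num_examples):
        raise ValueError("need one example count per local model")
    total = sum(num_examples)
    if total <= 0:
        raise ValueError("total number of training examples must be positive")

    averaged: Params = {}
    for name, reference in local_params[0].items():
        averaged[name] = [
            # Each hospital contributes in proportion to its dataset size.
            sum(p[name][i] * n for p, n in zip(local_params, num_examples)) / total
            for i in range(len(reference))
        ]
    return averaged


# Two hypothetical hospitals with 100 and 300 training examples.
hospital_a = {"weights": [1.0, 2.0], "bias": [0.0]}
hospital_b = {"weights": [3.0, 6.0], "bias": [4.0]}
consensus = fed_avg([hospital_a, hospital_b], [100, 300])
print(consensus)  # {'weights': [2.5, 5.0], 'bias': [3.0]}
```

Because the second hospital has three times as much data, the averaged values sit three times closer to its parameters than to the first hospital’s.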

In other words, how these models change gets aggregated in the central server to create an updated "consensus model." This consensus model is then sent back to each hospital’s local database to be trained once again. The cycle continues until researchers judge the final consensus model to be accurate enough. (There's a review of this process available.)
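The train, aggregate, redistribute cycle can be sketched with a toy example. Everything here is a simplifying assumption: the "model" is a single number `w` in `y = w * x`, each site trains with a few steps of gradient descent, and the loop runs for a fixed number of rounds rather than stopping when researchers judge the model accurate enough. The names `train_locally` and `run_federated_rounds` are hypothetical.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that stay at each site


def train_locally(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """A few gradient-descent steps on mean squared error, using local data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def run_federated_rounds(sites: List[Dataset], rounds: int = 50, w0: float = 0.0) -> float:
    """Repeat: send the model out, train at each site, average the results."""
    w = w0
    total = sum(len(data) for data in sites)
    for _ in range(rounds):
        # Only the trained parameter leaves each site, never the (x, y) pairs.
        local_models = [train_locally(w, data) for data in sites]
        # Weighted average by dataset size gives the new consensus model.
        w = sum(lw * len(data) for lw, data in zip(local_models, sites)) / total
    return w


# Two hypothetical sites whose data both follow y = 2x.
site_a = [(1.0, 2.0), (2.0, 4.0)]
site_b = [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)]
w_final = run_federated_rounds([site_a, site_b])
print(round(w_final, 4))  # 2.0
```

In a real study the stopping rule would depend on validation results rather than a fixed round count.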

This keeps both sides happy. For hospitals, it helps preserve privacy, since the information sent back to the central server is anonymous; personal information never crosses the hospital’s firewall. For researchers, it means machine learning can reach its full potential by training on real-world data, yielding less biased results that are more likely to be sensitive to niche diseases.

Over the past few years, there has been a boom in research using this method. For example, in 2021, Summers and others used federated learning to see whether they could predict diabetes from CT scans of abdomens.

“We found that there were signatures of diabetes on the CT scanner [for] the pancreas that preceded the diagnosis of diabetes by as much as seven years,” said Summers. “That got us very excited that we might be able to help patients that are at risk.”

What are the downsides?

The method is still not perfect. Plenty of problems remain for both the researchers and the hospitals. For example, although the same AI model is sent everywhere, hospitals might have very different ways of collecting their patient data and may structure it in different ways.

“You have to make sure that your script can run so that your data is harmonized—otherwise, it's [comparing] apples and oranges,” said Folkert Asselbergs, a cardiology professor at Amsterdam UMC in the Netherlands. He has spent years finding ways to develop federated learning for heart research in hospitals throughout Europe.

He says that for federated learning to really take off, hospitals need to use a universal standard for medical data, similar to how the EU has set USB-C as the standard port for electronics.

But right now, even different brands of medical devices can record medical data differently. And that doesn’t even cover cases where doctors just write down information as plain old text.
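As a rough illustration of what a harmonization script might do, the sketch below maps two differently structured records onto one shared format. The field names (`patient_weight_kg`, `wt_lb`, `weight_kg`) and the function `harmonize` are invented for this example and do not come from any real hospital system or data standard.

```python
from typing import Dict

LB_TO_KG = 0.45359237  # exact definition of the international pound


def harmonize(record: Dict[str, float]) -> Dict[str, float]:
    """Convert a site-specific record into one shared schema (weight in kg)."""
    if "patient_weight_kg" in record:  # hypothetical layout at site A
        return {"weight_kg": record["patient_weight_kg"]}
    if "wt_lb" in record:  # hypothetical layout at site B
        return {"weight_kg": record["wt_lb"] * LB_TO_KG}
    # Refuse to guess: an unknown layout should be fixed by a person.
    raise ValueError(f"unrecognized record layout: {sorted(record)}")


site_a_record = {"patient_weight_kg": 70.0}
site_b_record = {"wt_lb": 154.0}
print(harmonize(site_a_record))
print(harmonize(site_b_record))
```

Without a step like this, a model would treat 70 and 154 as very different patients when the two weights are nearly identical.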

Fortunately, that problem seems to be solved by another recent trend. Using natural language processing, many modern algorithms are able to "read" the reports written by doctors and convert them to the format needed for databases.

And just like all those new social media platforms, federated learning doesn’t provide a 100 percent guarantee of security when sharing AI models. Someone could still instruct the algorithm to collect patient names in the database and send them to the central server, for example.

Asselbergs said this is where doctors should have the biggest role, acting as gatekeepers for the data. They can check the instructions for the algorithm and audit the output data before it leaves the hospital. If they don’t like it, they can shut off their database to the federated network.
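One simple form of that audit can be sketched as follows. This is a hypothetical check, not a tool Asselbergs describes: it only confirms that an outgoing update contains the expected fields and nothing but numbers. Passing it does not prove that no patient information has leaked, so it would complement human review rather than replace it.

```python
from numbers import Real
from typing import Any, Dict, List, Set


def audit_update(payload: Dict[str, Any], allowed_keys: Set[str]) -> List[str]:
    """Return a list of problems found in an outgoing update (empty if none)."""
    problems = []
    for key, value in payload.items():
        if key not in allowed_keys:
            problems.append(f"unexpected field: {key}")
            continue
        values = value if isinstance(value, list) else [value]
        # Model updates should be purely numeric; text could carry names.
        if not all(isinstance(v, Real) and not isinstance(v, bool) for v in values):
            problems.append(f"non-numeric content in field: {key}")
    return problems


allowed = {"weights", "num_examples"}  # assumed shape of a legitimate update
clean = {"weights": [0.1, 0.2], "num_examples": 120}
leaky = {"weights": [0.1, 0.2], "patient_names": ["(redacted)"]}

print(audit_update(clean, allowed))  # []
print(audit_update(leaky, allowed))  # ['unexpected field: patient_names']
```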

Is this in hospitals now?

It’s still early days. “Federated learning is still in a research setting only,” said Asselbergs. That said, he has noticed it catching on in research circles—federated learning has popped up in conferences he has been to in the past few years.

Summers feels there’s still a long way to go, and federated learning is not as automated as you might think. “In most cases, you still have to curate the data at the individual sites,” Summers said. This means that getting more hospitals on board is a slow process. “You need a champion at each site to find accurate labels of the data.”

But these are problems that can be fixed, said Asselbergs. In the future, doctors could first note what they find in patients and then use AI and federated learning to help them make the best decision, he said.

“You still have the human in the loop for validation, but you're not depending on the human” for diagnosis, he said. “We just have to make sure that the system works for them instead of the other way round.”

Fintan Burke is a freelance science journalist based in Hamburg, Germany. He has also written for The Irish Times, Horizon Magazine, and SciDev.net and covers European science policy, biology, health, and bioethics.